1. Most Recent Work Overview

- Preprint in PDF (2.1 MBytes); © Copyright 2012 by IEEE.

For more information about our Formic board and its use for prototyping large multicore systems, please visit: http://formic-board.com

-

ABSTRACT:

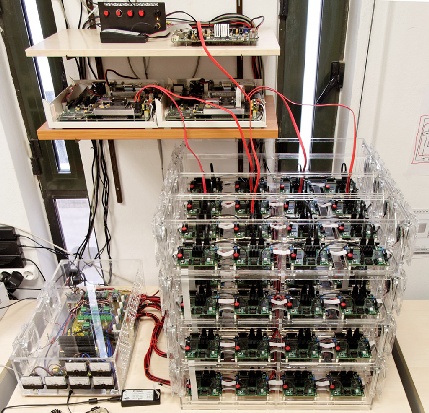

Modeling emerging multicore architectures is

challenging and imposes a tradeoff between simulation speed

and accuracy. An effective practice that balances both targets

well is to map the target architecture on FPGA platforms.

We find that accurate prototyping of hundreds of cores on

existing FPGA boards faces at least one of the following

problems: (i) limited fast memory resources (SRAM) to model

caches, (ii) insufficient inter-board connectivity for scaling the

design or (iii) the board is too expensive. We address these

shortcomings by designing a new FPGA board for multicore

architecture prototyping, which explicitly targets scalability

and cost-efficiency. Formic has a 35% bigger FPGA, three

times more SRAM, four times more links and costs at most

half as much when compared to the popular Xilinx XUPV5

prototyping platform. We build and test a 64-board system

by developing a 512-core, MicroBlaze-based, non-coherent

hardware prototype with DMA capabilities, with full networkon-

chip in a 3D-mesh topology. We believe that Formic offers

significant advantages over existing academic and commercial

platforms that can facilitate hardware prototyping for future

manycore architectures.

- Preprint in PDF (640 KBytes); © Copyright 2010 by IEEE.

-

ABSTRACT:

SARC merges cache controller and network interface functions

by relying on a single hardware primitive: each access checks the tag

and the state of the addressed line for possible occurrence of events

that may trigger responses like coherence actions, RDMA, synchronization,

or configurable event notifications. The fully virtualized and protected

user-level API is based on specially marked lines in the scratchpad space

that respond as command buffers, counters, or queues.

The runtime system maps communication abstractions

of the programming model to

data transfers among local memories using remote write or read DMA

and into task synchronization and scheduling using notifications,

counters, and queues. The on-chip network provides efficient

communication among these configurable memories,

using advanced topologies and routing algorithms,

and providing for process variability in NoC links.

We simulate benchmark kernels on a full-system simulator to compare speedup and

network traffic against cache-only systems with directory-based coherence

and prefetchers. Explicit communication provides 10 to 40% higher speedup on 64 cores,

and reduces network traffic by factors of 2 to 4,

thus economizing on energy and power;

lock and barrier latency is reduced by factors of 3 to 5.

- Preprint in PDF (370 KBytes); © Copyright 2010 by Springer.

| ABSTRACT: We present the hardware design and implementation of a local memory system for individual processors inside future chip multiprocessors (CMP). Our memory system supports both implicit communication via caches, and explicit communication via directly accessible local ("scratchpad") memories and remote DMA (RDMA). We provide run-time configurability of the SRAM blocks that lie near each processor, so that portions of them operate as 2nd level (local) cache, while the rest operate as scratchpad. We also strive to merge the communication subsystems required by the cache and scratchpad into one integrated Network Interface (NI) and Cache Controller (CC), in order to economize on circuits. The processor interacts with the NI at user-level through virtualized command areas in scratchpad; the NI uses a similar access mechanism to provide efficient support for two hardware synchronization primitives: counters, and queues. We describe the NI design, the hardware cost, and the latencies of our FPGA-based prototype implementation that integrates four MicroBlaze processors, each with 64 KBytes of local SRAM, a crossbar NoC, and a DRAM controller. One-way, end-to-end, user-level communication completes within about 20 clock cycles for short transfer sizes. |

|

| The prototype includes multiple Xilinx XUPV5 processor boards, containing 4 MicroBlaze cores per board, interconnected via a Xilinx ML325 switch board that contains 3 parallel crossbars, using 3 RocketIO (2.5 Gbps) links per board. | |

2. Support for Explicit Communication and Synchronization

- Preprint in PDF (390 KBytes); © Copyright 2010 by ACM.

-

ABSTRACT:

Per-core local (scratchpad) memories allow direct inter-core communication,

with latency and energy advantages over coherent cache-based communication,

especially as CMP architectures become more distributed.

We have designed cache-integrated network interfaces (NIs),

appropriate for scalable multicores,

that combine the best of two worlds

--the flexibility of caches and the efficiency of scratchpad memories:

on-chip SRAM is configurably shared among

caching, scratchpad, and virtualized NI functions.

This paper presents our architecture,

which provides local and remote scratchpad access,

to either individual words or multiword blocks through RDMA copy.

Furthermore, we introduce event responses,

as a mechanism for software configurable synchronization primitives.

We present three event response mechanisms

that expose NI functionality to software,

for multiword transfer initiation,

memory barriers for explicitly-selected accesses of arbitrary size,

and multi-party synchronization queues.

We implemented these mechanisms in a four-core FPGA prototype, and

evaluated the on-chip communication performance on the prototype

as well as on a CMP simulator with up to 128 cores.

We demonstrate efficient synchronization, low-overhead communication,

and amortized-overhead bulk transfers,

which allow parallelization gains for fine-grain tasks,

and efficient exploitation of the hardware bandwidth.

- Slides available in PDF (1.5 MBytes); © Copyright 2010 by FORTH.

-

ABSTRACT:

Tasks of parallel or parallelized programs

cooperate with each other by exchanging data,

which get transferred from one local or cache memory to another.

If we let hardware prefetchers and cache coherence

perform these transfers,

significant network bandwidth (and energy) are consumed,

especially under directory-based coherence,

and extra latencies occur

when prefetchers fail to correctly predict software behavior.

Recent advances in programming models and runtime systems

allow runtime libraries to know when specific software objects

should be transferred, from where to where,

during task scheduling and execution,

thus explicitely managing locality

and economizing on network packets and energy.

We argue that the runtime tables that contain such knowledge for explicit communication fulfill goals analogous to coherence directories, and can thus obviate hardware coherence. Furthermore, these runtime tables also serve functions analogous to page tables, and thus traditional virtual memory could perhaps be replaced by a simpler scheme, used for protection purposes only. In such new systems, the runtime instructs the hardware to replicate or migrate entire (variable-size) "objects", rather than individual cache lines or pages one at a time. When a large data structure spans several such objects, inter-object pointers are a problem. We argue for a new breed of parallel data structures and algorithms that operate in units of objects that are larger than the traditional small data structure nodes, in a way analogous to what the data base community has done long time ago for disk-resident data.

- Slides available in PDF (130 KBytes); © Copyright 2008 by FORTH.

- Available in PDF (3.7 MBytes) - Slides in PDF (40 KBytes); © Copyright 2007 by FORTH.

- Available in PDF (420 KBytes); © Copyright 2009 by UPC, BSC, and FORTH.

3. Hardware Prototypes for Interprocessor Communication Mechanisms

3.1 Tightly-coupled Network Interfaces (2008-2010)

- Preprint in PDF (440 KBytes) © Copyright 2009 by IEEE; Slides in PDF (550 KBytes) © Copyright 2009 by FORTH.

-

This conference paper is extended and superseeded by the

Transactions of HiPEAC journal paper.

- Available in PDF (270 KBytes) - Slides in PDF (210 KBytes) © Copyright 2008 by FORTH.

-

This paper is superseeded by the

Transactions of HiPEAC journal paper.

3.2 Loosely-coupled Network Interfaces (2006-2007)

- Preprint in PDF (130 KBytes); © Copyright 2007 by IEEE.

| ABSTRACT: Parallel computing systems are becoming widespread and grow in sophistication. Besides simulation, rapid system prototyping becomes important in designing and evaluating their architecture. We present an efficient FPGA-based platform that we developed and use for research and experimentation on high speed interprocessor communication, network interfaces and interconnects. Our platform supports advanced communication capabilities such as Remote DMA, Remote Queues, zero-copy data delivery and flexible notification mechanisms, as well as link bundling for increased performance. We report on the platform architecture, its design cost, complexity and performance (latency and throughput). We also report our experiences from implementing benchmarking kernels and a user-level benchmark application, and show how software can take advantage of the provided features, but also expose the weaknesses of the system. |

|

| The prototype includes eight x86 nodes, each with a 10Gbps PCI-X RDMA-capable NIC (DiniGroup Virtex-II Pro boards), interconnected via four Xilinx ML325 switch boards (variable-size buffered crossbars), using four RocketIO (2.5 Gbps) links per node. | |

- Available in PDF (200 KBytes) - Slides in PDF (1 MByte) © Copyright 2006 by FORTH.

-

This paper is superseeded by the

IEEE IC-SAMOS 2007 conference paper.

4. Other Papers, Posters, and Related Work

- Preprint in PDF (430 KBytes) © Copyright 2010 by IEEE.

- Poster in PDF (660 KBytes) © Copyright 2010 by FORTH.

- Available in PDF (290 KBytes); © Copyright 2010 by FORTH.

- Preprint in PDF (210 KBytes); © Copyright 2007 by ACM.

- Preprint in PDF (120 KBytes); © Copyright 2006 by IEEE.

- Preprint in PDF (110 KBytes); © Copyright 2006 by ACM.

5. Past Work on IPC: The Telegraphos Project (1993-97)

Telegraphos -- from the Greek words ``tele'' (remote) and ``grapho'' (write) -- was a project on low-latency, high-throughput interprocessor communication. During that project, in 1993-1997, at FORTH-ICS CARV Laboratory, workstation clustering prototypes were designed and built, including processor-network interfaces for remote-write based, protected, user-level communication.

Projects - Funding - Acknowledgements

This work is currently (2010-2012) being conducted mostly within the ENCORE (#248647) project on "ENabling technologies for a programmable many-CORE", and in cooperation with the TEXT (#261580) project, both funded by the European Union FP7 Programme. In the period 2006-2009, this work was conducted mostly within the SARC European integrated project on "Scalable computer ARChitecture", funded by the European Union FP6 Programme (#027648). Financial support, especially for hardware prototyping, was also provided by the FP6 Marie-Curie project UNiSIX (MC #509595). Our work in general, and the ENCORE and SARC projects in particular, are within the framework of the HiPEAC Network of Excellence.

Angelos Bilas, Alex Ramirez, and Georgi Gaydadjiev helped us shape our ideas; we deeply thank them. We also thank, for their participation and assistance: M. Ligerakis, M. Marazakis, M. Papamichael, E. Vlahos, G. Mihelogiannakis, and A. Ioannou.

We also deeply thank the Xilinx University Program for donating to us a number of FPGA chips, boards, and licences for the Xilinx EDA tools.

© Copyright 2006-2012 by IEEE or ACM or Springer or FORTH:

These papers are protected by copyright. Permission to make digital/hard copies of all or part of this material without fee is granted provided that the copies are made for personal use, they are not made or distributed for profit or commercial advantage, the IEEE or ACM or Springer or FORTH copyright notice, the title of the publication and its date appear, and notice is given that copying is by permission of the IEEE or of the ACM or of the Springer or of the Foundation for Research & Technology - Hellas (FORTH), as appropriate. To copy otherwise, in whole or in part, to republish, to post on servers, or to redistribute to lists, requires prior specific written permission and/or a fee.